A well-trained Helper Chat Bot is a great way to boost workplace efficiency. During working hours, it can reduce the time spent on common, lower-complexity inquiries, which frees agents to spend more time on complex issues. During off-hours on nights and weekends, a Helper Bot can keep answering questions, reducing the backlog of support tickets that would otherwise build up while the team is away.

At any hour, a Helper Chat Bot also ensures that visitors get an immediate response instead of having to wait. Often that response answers the question outright. Even when it doesn’t, the visitor is told right away that the team will reply as soon as possible, and can ask other questions in the meantime. In this post we share our experience setting up our own bot.

We look at whether the bot has improved over time as it has been trained, and discuss challenges specific to our Helper Bot, including common reasons for incorrect responses, with examples.

If you’d like to start experimenting with chat bots on your own site, Formilla offers a 15-day free trial of our platform, complete with Helper Chat Bots. Visit the Formilla pricing page for more information and to compare plan features.

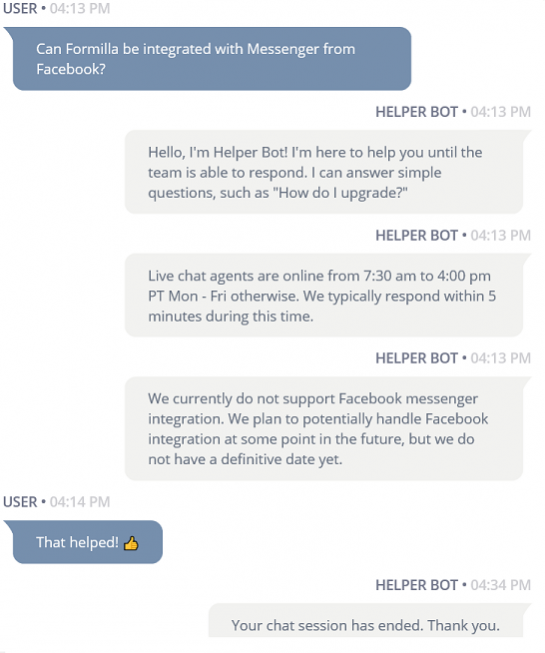

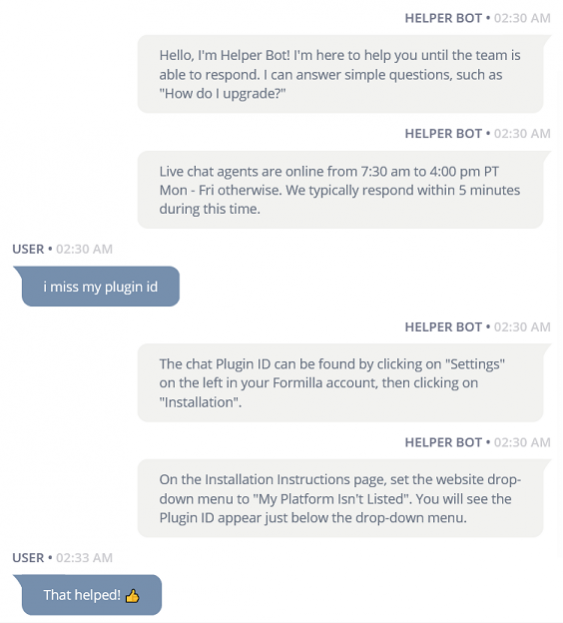

We began with around 40 questions. We added variations for each one right away, aiming for the suggested 10 variations per question or as close to that as we could, and then wrote an answer for each. In many cases, existing Canned Messages translated easily into bot answers. At first, we switched the bot on at the beginning of the workday and disabled it before leaving in the evening: we wanted to be sure it was accurate enough to be useful after hours before leaving it running overnight, which we began doing after roughly 12 weeks. The bot now stays on overnight; below are some samples of its after-hours responses.

Example A:

Example B:

We trained the bot roughly every 2 to 3 days, typically for about 30 minutes per session. Early sessions ran longer, since each one added several variations or even entirely new questions. Other teams should expect the same: you cannot anticipate every question (or variation of a question) that a visitor might ask and that would make a good trained response. Early sessions therefore tend to involve a lot of new question creation, while later sessions consist mostly of assigning variations to the correct existing question or deleting irrelevant phrases, with much less time spent creating new questions.

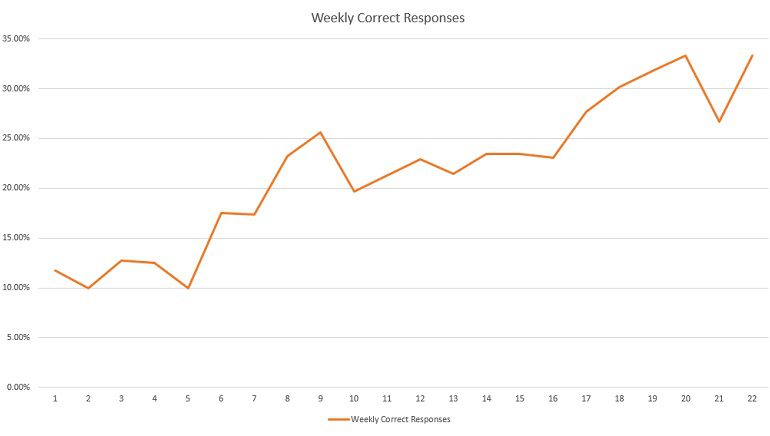

The Helper Bot has been running for a total of 22 weeks. In the graph below we have charted the bot’s performance over this time. The orange line marks the percentage of chats the bot answered correctly each week. This percentage has trended upward overall, and in recent weeks it has generally stayed above 30 percent. Over the full 22 weeks, the bot correctly answered 3,080 of 14,130 chat submissions.
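As a quick check on those totals, the cumulative rate over all 22 weeks works out to roughly 21.8%. That is below the 30%-plus of recent weeks, which fits the upward trend: earlier weeks, before much training had happened, pull the overall average down. The snippet below simply redoes that arithmetic with the figures quoted above.

```python
# Cumulative figures reported above for the full 22-week period
answered_correctly = 3_080
total_submissions = 14_130

accuracy = answered_correctly / total_submissions
print(f"Cumulative accuracy: {accuracy:.1%}")  # → Cumulative accuracy: 21.8%
```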

Because we have an international customer base and our bot currently uses only an English AI engine, it performs somewhat worse than it otherwise would. We estimate that accuracy could improve by roughly 5% to 10% if we routed English-speaking visitors to an English-powered bot and set up separate bots for other languages (for example, routing Spanish-speaking visitors to a Spanish-powered bot). We have also set up an “I don’t understand this language” question, whose answer tells the visitor that a live person will get back to them using Google Translate. After a few weeks of assigning most non-English phrases to it, this “question” became very accurate, though of course it does not actually answer the visitor’s question (and it is less likely to fire for less common languages).

Customers whose visitors are mostly domestic rather than international should see significantly better results over time. Keep in mind that the bot engine supports 12 languages natively, so it can interpret Spanish, French, Chinese, and more. If your site has separate language pages, for example a Spanish widget on Spanish pages and an English widget on English pages, you can pair a Spanish-based bot with the Spanish widget and an English-based bot with the English widget. This should also be more accurate than our current setup, which uses a single English-based bot for an international audience.

Some of our traffic also comes from visitors who click the “Powered by Formilla” branding and reach us instead of the business they meant to contact. This contributes to some misses. We try to filter out phrases we recognize as “wrong site” submissions, but depending on the wording, it is not always possible to tell a relevant phrase from a “wrong site” one when training on the collected phrases. Any that slip through during training add to the miss count.

Our Helper Bot uses a confidence level of 75%, which is currently the default for newly created bots. We have not adjusted it, because so far most misses seem better addressed by adding variations during regular training. The bot is also set to deactivate after 3 incorrect responses, again the current default for new bots. We have kept this setting too, since it seems to strike a good balance between visitors who want a few tries at asking their question and visitors who are just adding details to their first question without asking anything new.
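To make these two settings concrete, here is a minimal Python sketch of how a confidence threshold and a miss limit might interact within a single chat. It is a hypothetical illustration, not Formilla’s code: `BotSession`, `respond`, and the reply texts are made up, the match score is assumed to arrive from the bot engine as a number between 0 and 1, an “incorrect response” is treated as a message that no answer matched at 75% or higher, and a confident answer is assumed to reset the count (in line with “3 incorrect attempts in a row”).

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical sketch: names and replies are illustrative, not Formilla's API.
CONFIDENCE_THRESHOLD = 0.75  # the default confidence level described above
MAX_MISSES = 3               # the default "deactivate after 3 incorrect responses"

UNKNOWN_REPLY = "Sorry, I didn't catch that. Could you rephrase your question?"
HANDOFF_REPLY = "Our team will get back to you as soon as possible."


@dataclass
class BotSession:
    """Tracks one visitor chat: consecutive misses and whether the bot is active."""
    misses_in_a_row: int = 0
    active: bool = True

    def respond(self, best_answer: str, confidence: float) -> Optional[str]:
        """Reply using the top-scoring match; return None once the bot is deactivated."""
        if not self.active:
            return None  # a live agent handles the chat from here
        if confidence >= CONFIDENCE_THRESHOLD:
            self.misses_in_a_row = 0  # assumption: a confident answer resets the count
            return best_answer
        self.misses_in_a_row += 1
        if self.misses_in_a_row >= MAX_MISSES:
            self.active = False       # third miss in a row: hand off and go quiet
            return HANDOFF_REPLY
        return UNKNOWN_REPLY


session = BotSession()
print(session.respond("<cancellation answer>", 0.92))  # confident match: answered
for score in (0.40, 0.55, 0.60):                       # three low-confidence messages
    print(session.respond("<display rules answer>", score))
print(session.active)  # → False
```

Raising the threshold in a model like this trades fewer wrong answers for more “unknown” replies, which is why we have preferred adding variations over tuning the number.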

One common reason for misses is that chat doesn’t always lend itself to perfectly phrased questions. Many visitors essentially “type like they text”, splitting one question across several short messages. Questions that probably would have been answered correctly can be missed simply because they were not asked in one complete sentence.

In this example, we do have a question for display rules on a specific page. However, with the question broken into separate lines as in the picture, the correct response does not reach a high enough confidence level for the bot to answer. The bot also cannot find an answer for the rest of the broken-line messages, so at this point it is likely to deactivate (which is when I jumped in to finish the chat).

It is worth mentioning that we configured our initial greeting message to encourage visitors to ask simple, direct questions. This should nudge visitors to ask in a complete sentence rather than in broken lines, while also discouraging long, multi-sentence questions. Allowing up to 3 incorrect attempts in a row gives visitors room to try a few different questions.

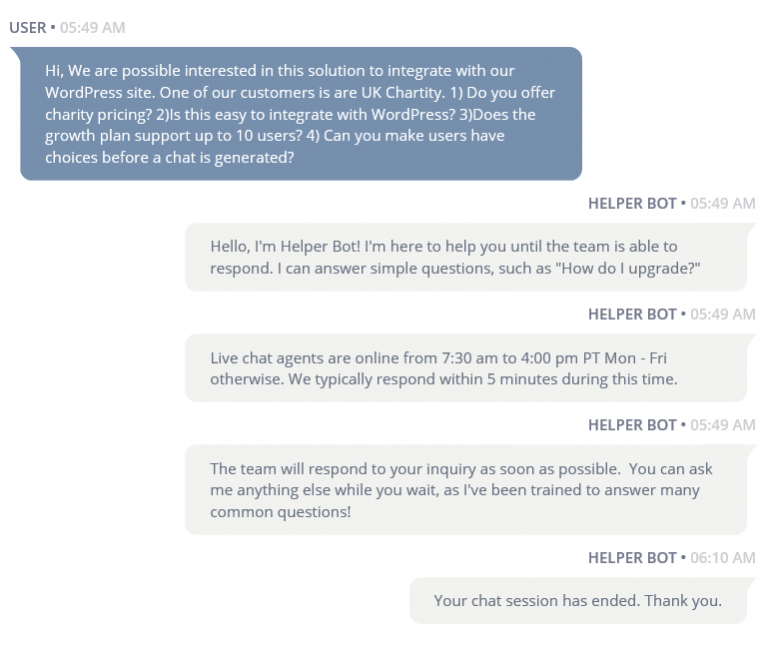

Another common reason is the opposite of broken-line typing: the visitor types an entire paragraph instead of one sentence. If one chunk of the paragraph closely matches a saved question or variation, the bot may still find a match. Most of the time, though, a paragraph contains more than one question, so there are multiple plausible matches and the Helper Bot cannot settle on just one.
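One way to picture why a multi-question paragraph fails is a toy model where confidence is the share of the total match evidence that goes to the best question. This is a simplification for illustration, not necessarily how the Helper Bot engine computes confidence, and the question labels and raw scores below are made up. Against the 75% threshold described earlier, the focused question clears the bar while the two-part paragraph does not.

```python
def top_share(scores: dict[str, float]) -> tuple[str, float]:
    """Normalize raw match scores into shares summing to 1; return the best question and its share."""
    total = sum(scores.values())
    best = max(scores, key=scores.get)
    return best, scores[best] / total


# One focused question: the evidence points almost entirely at one saved question.
print(top_share({"cancel_subscription": 9.0, "display_rules": 0.5, "pricing": 0.5}))
# → ('cancel_subscription', 0.9)

# A paragraph asking two things splits the evidence between two saved questions.
print(top_share({"cancel_subscription": 4.5, "display_rules": 4.5, "pricing": 1.0}))
# → ('cancel_subscription', 0.45)
```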

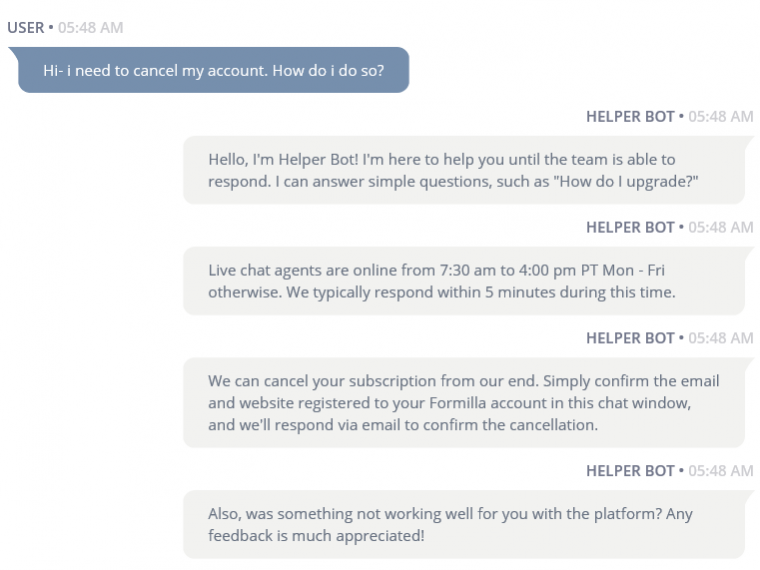

Sometimes a question is thoroughly trained, but visitors still find a way to ask it so differently that the bot cannot match it to the correct response. For example, our cancellation response is very well trained and generally works well for anything even moderately close to “how do I cancel my subscription?”

Even so, someone will eventually find a creative way to phrase the same question that the Helper Bot does not recognize.

When that happens, simply add the new phrasing as a variation. You won’t think of every way to ask a question up front, but each new phrasing a visitor uses can easily be added so the bot answers correctly the next time.

When training your Helper Bot, instead of matching a phrase to an existing question or creating a new question, you can also delete the phrase or assign it the “Ignore” response. Ignore trains the bot not to respond to that phrase at all, while Delete removes the phrase entirely.

Fairly early in training, we noticed that adding phrases to Ignore is best done sparingly. Just like any other question, Ignore can be matched based on all the variations assigned to it. Before ignoring a phrase, consider whether its wording closely matches any legitimate questions you have created. And if you build up a sizeable “library” of ignored phrases, new questions that would otherwise have received the “unknown” answer may start being ignored instead, simply because Ignore carries so many variations.

For example, say someone reached our site by mistake and wrote “I want to cancel my insurance policy, I don’t see the option”. The first instinct might be to select “Ignore” during training: the visitor was on the wrong site, so ideally the bot would not respond at all. However, this phrase could easily be confused with legitimate requests to us about cancelling a subscription. We recommend using Ignore only for phrases that cannot possibly match any legitimate question, such as complete gibberish from someone testing out your chat widget.
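The toy Python model below illustrates that risk. It is a deliberate simplification, not the Helper Bot’s actual matching engine: it scores a phrase by plain word overlap (Jaccard similarity) against every stored variation, and the question labels and training phrases are hypothetical. Once the wrong-site insurance phrase is filed under Ignore, it outscores the legitimate cancellation question for a visitor who really does want to cancel a subscription.

```python
def words(text: str) -> set[str]:
    """Lowercase set of words with basic punctuation stripped from each end."""
    return {w.strip(".,?!'\"").lower() for w in text.split()} - {""}


def similarity(a: str, b: str) -> float:
    """Jaccard overlap between the word sets of two phrases (0 = nothing shared, 1 = identical)."""
    wa, wb = words(a), words(b)
    return len(wa & wb) / len(wa | wb)


def best_match(phrase: str, training: dict[str, list[str]]) -> tuple[str, float]:
    """Return the label whose closest stored variation is most similar to the phrase."""
    scored = {
        label: max(similarity(phrase, v) for v in variations)
        for label, variations in training.items()
    }
    label = max(scored, key=scored.get)
    return label, scored[label]


training = {
    "cancel_subscription": ["How do I cancel my subscription?", "Cancel my Formilla plan"],
    "Ignore": ["asdf test test"],  # gibberish from someone testing the widget
}
visitor = "I want to cancel my subscription, I don't see the option"

label, score = best_match(visitor, training)
print(label, round(score, 2))  # → cancel_subscription 0.33

# The wrong-site phrase gets filed under Ignore instead of being deleted:
training["Ignore"].append("I want to cancel my insurance policy, I don't see the option")
label, score = best_match(visitor, training)
print(label, round(score, 2))  # → Ignore 0.75
```

Deleting the insurance phrase instead of ignoring it leaves the `Ignore` entry with only the gibberish variation, so the legitimate request keeps matching the cancellation question.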

The Delete option removes the phrase from the bot completely. In the insurance example above, it is best to simply delete the phrase rather than risk training the bot to ignore similar requests from people who actually meant to reach us. You may also receive legitimate questions that you would rather not give a programmed bot response, perhaps because answering requires a complicated explanation that only a live person should attempt. If you would prefer the bot give the “unknown” response to such questions, choose Delete: the question still gets the unknown response, and the Ignore library does not grow too large.

We have more tips and best practices gathered at the end of our Helper Bot Tutorial. Feel free to reach out via chat or support@formilla.com if you need any help with your own Helper Bot!

Read more about how to add a chat bot to your site.